“Tell me about a time when you disagreed with a coworker…” Hiring managers use questions like this to get a sense of how a job candidate will handle disagreements and work with others under difficult circumstances.

It’s a complicated topic for user experience, where ideas are assumptions to be validated and opinions are all on equal footing. In the business of measuring “better,” we’re expected to think critically and argue from reason.

If you’re lucky enough to work with an experienced and diverse group of people, opinions will vary and disagreements will be the norm. The problem is that fear of controversy can make people less likely to engage in the kinds of useful debates that lead to good designs.

Creating a culture of user experience involves asking uncomfortable questions; the key is to navigate that friction so that people feel encouraged not just to contribute but also to question ideas.

A/B testing is a good way to help teams separate concerns and learn to disagree constructively. Minutiae get sorted out quickly, the work moves forward, and most importantly you help create a framework for challenging ideas, not people.

A/B testing is fast, good and cheap

Decisions aren’t always obvious, and you may not have the luxury of an executive decision-maker to break an impasse. More often than not, you have to work with people to rationalise a direction and find your way out of the weeds.

The exercise of running an A/B test can help disentangle design intent from mode of execution. When stakeholders see how easy it is to separate fact from fiction, there’s less fear of being wrong and ideas can flow and be rejected, developed or improved upon more freely.

Another perk of A/B testing is that platforms like Optimizely or VWO let you run experiments with live users on your website. “In the wild” testing gives stakeholders a chance to see for themselves how their ideas stand to impact customer reality.

It’s now easier than ever to design and deploy low-risk A/B experiments, and there’s no excuse not to do it. But like any tool, A/B testing has its limitations – and no product has a warranty that protects against misuse.

Draws are boring, fans want KOs

What happens when an A/B test fails to deliver clear results?

A/B testing software is often marketed around dramatic examples that show impactful decisions made easy through testing. Stakeholders may be conditioned to think of testing in terms of winners and losers, and experiments that don’t produce clear outcomes can create more questions than answers:

“We A/B tested it, but there was no difference, so it was a waste…”

A lack of familiarity with the domain can lead to criticism of the method itself, rather than its use. This “carpenter blaming the tools” mentality can disrupt stakeholders’ ability to work harmoniously – and that is not the kind of conflict that is constructive.

The reality is that not every A/B test will yield an obvious winner, and this has partly to do with how experiments are designed. For better or worse, tools like VerifyApp now make it easy to design and deploy tests. Like anything else, it’s garbage in, garbage out – and there’s no sample size large enough to turn a noisy experiment into actionable insights.

Such heartburn is only made worse when teams undertake A/B testing without a clear sense of purpose. A/B tests that aren’t designed to answer questions defy consistent interpretation, and only add to the gridlock of subjective analysis.

As an example, I’ll use a topic which I think is well suited for A/B testing: call-to-action buttons.

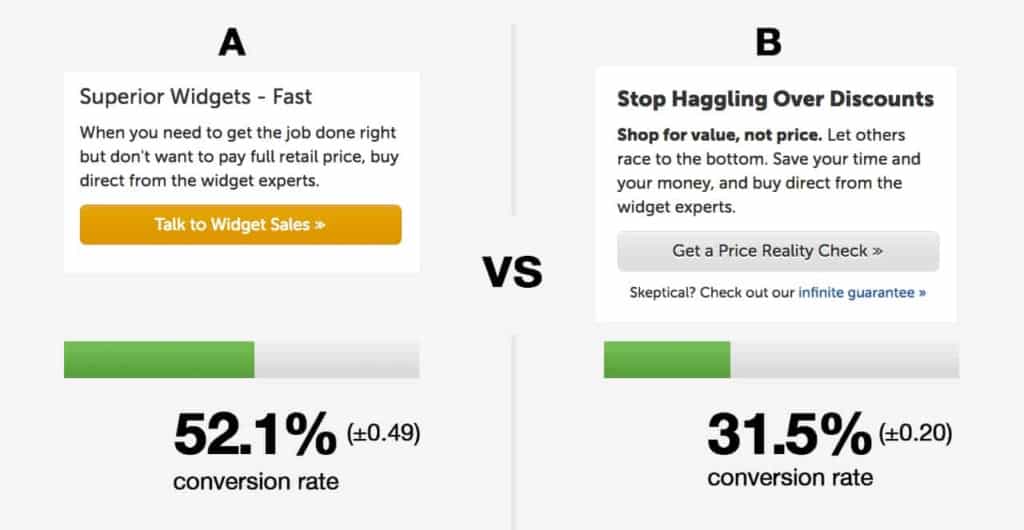

Consider the following experiment between 2 versions of the same call-to-action. At a glance, the outcome may seem clear:

What makes this test result problematic is that there are multiple design-related differences (font weight, content, copy length, button colour) between A and B. So tactically, we know one approach may convert better, but we don’t really know why. Ultimately, this experiment asks the question:

“Which converts better?”

…and only narrows it down to at least 4 variables.
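A quick way to catch this before launch is to write each variant down as a set of attributes and count how many differ. The sketch below is only an illustration: the attribute names mirror the four differences listed above, but the values and the helper `differing_attributes` are hypothetical and not part of any testing platform.

```python
# Sentinel so an attribute missing from one variant counts as a difference.
_MISSING = object()


def differing_attributes(variant_a: dict, variant_b: dict) -> list:
    """Return the sorted names of attributes whose values differ between variants."""
    keys = set(variant_a) | set(variant_b)
    return sorted(
        key
        for key in keys
        if variant_a.get(key, _MISSING) != variant_b.get(key, _MISSING)
    )


# Hypothetical descriptions of the two call-to-action buttons.
variant_a = {
    "font_weight": "regular",
    "content": "Sign up",
    "copy_length": "short",
    "button_colour": "blue",
}
variant_b = {
    "font_weight": "bold",
    "content": "Start your free trial today",
    "copy_length": "long",
    "button_colour": "green",
}

changed = differing_attributes(variant_a, variant_b)

# More than one changed attribute means the test cannot say *why* one side won.
is_confounded = len(changed) > 1

print(changed)
print("Confounded:", is_confounded)
```

If the list has more than one entry, the experiment can still tell you which version converts better, but not which change is responsible.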

When you’re new to A/B testing, a few noisy experiments are a forgivable offence and you may find you get more traction by focusing on what stakeholders are doing right. Any testing is better than none, but habits form quickly, so it’s important to use early opportunities like this to coach people on how experimental design affects our ability to get answers.

Another reason pairwise experiments don’t always have clear “winners” is that sometimes, there’s just no difference. A statistical tie is not a sexy way to market A/B testing tools. Consider another hypothetical example:

Notice that there’s only 1 difference between A and B – the button text label. Is one version better than the other? Not really. It’s probably safe for us to conclude that, for the same experimental conditions, the choice between these 2 text labels doesn’t really impact conversion rate.
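One common way to decide whether a gap between two conversion rates is a real difference or a statistical tie is a two-proportion z-test. The sketch below uses only the Python standard library. The visitor and conversion counts are made up for illustration (they are not the figures from the example above), and the 0.05 threshold is a conventional assumption rather than a rule.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Cannot test: pooled conversion rate is 0 or 1.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 2,400 visitors per variant.
z, p_value = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=126, n_b=2400)

ALPHA = 0.05  # assumed significance threshold
is_tie = p_value >= ALPHA

print(f"z = {z:.2f}, p = {p_value:.2f}, statistical tie: {is_tie}")
```

With these made-up numbers, a 5.0% versus 5.25% conversion rate is well within the range you would expect from chance alone, so the honest reading is "no detectable difference", not "B wins".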

Does a stalemate make for a compelling narrative? Maybe not – but now we know something we didn’t before we conducted this anticlimactic experiment.

So while a tie can be valid experimentally, it may not help defuse some of the emotionally charged debates that get waged over design details. That is why it’s so critical to approach A/B testing as a way to answer stakeholder questions.

Want good answers? Ask good questions

When the work is being pulled in different directions, A/B testing can deliver quick relief. The challenge is that experiments are only as good as the questions they are designed to answer.

With a little coaching, it’s not difficult to help teams rely less on subjective interpretation and wage more intelligent arguments that pit idea vs. idea. It falls on UX teams to champion critical thinking, and coach others on how to consider design ideas as cause-and-effect hypotheses:

Will the choice of call to action button colour impact conversion rates?

Does photograph-based vs. illustrated hero artwork impact ad engagement?

Is Museo Sans an easier font to read than Helvetica Neue?

The act of formulating experimental questions helps to reveal the design intent behind specific ideas, and cultivates a sense of service to project goals rather than individual ones. This can also be done when creating a stakeholder engagement plan, for example. When stakeholders share an understanding of the intent, it’s easier to see they’re attacking the same problem in different ways.

It’s also imperative to keep experiments simple, and the best way to do that is to focus on one question at a time.

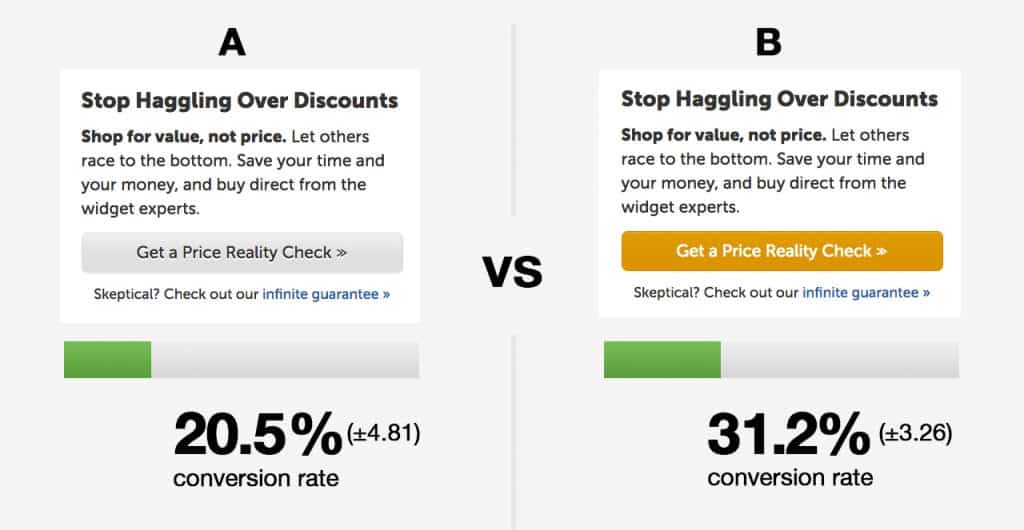

Consider the button example from earlier, where we concluded that the choice of 2 text labels had little impact on conversion rates. To see what else might move this needle, we can try to manipulate another variable, such as button colour:

This experiment asks the question:

Will the choice of call to action button colour impact conversion rates?

There’s only 1 difference between A and B – button colour. Now, we not only have an answer we can use tactically, but strategically we have a good idea why one converts better than the other.

Summary

Stakeholders won’t always see eye to eye with each other, and that’s no reason to shy away from or stifle conflict. Good ideas benefit from scrutiny, and quick A/B experiments help people get in the habit of asking tough questions. The answers lead to better tactical decisions, and help drive a culture of healthy debate.

A/B testing is just one tactic you can use within a strategy to win over stakeholders. If you want to help your team keep each other honest, try A/B testing as a first step towards creating a culture where co-workers feel more comfortable disagreeing as a means to a constructive end.

Do you have experience using A/B testing to drive conversations with stakeholders? Share your experience in the comments or the forums.

